Method Overview

We propose RIFF and iRIFF, reliable and generalizable methods for extracting semantic features from rectified flow models that significantly improve downstream task performance compared to existing semantic feature extractors. Features from large-scale image generative models are known to encode rich semantic information, as recently demonstrated by many methods that use diffusion models as general feature extractors. However, existing methods have several limitations that we aim to overcome: they often require fine-tuning or combining multiple pre-trained models to achieve good performance. In contrast, our approach is the first to extract and analyze features from rectified flow models, which significantly improves downstream quality without additional bells and whistles. We employ a flow inversion mechanism that aligns the input noise with the data, which further improves feature quality and makes feature extraction more robust. In addition to achieving state-of-the-art results in zero-shot semantic correspondence, we extend the established set of feature benchmarks with vision-language grounding tasks for both images and videos, and we propose a novel grounding technique that is based purely on cross-attention and requires no changes to the existing models. We show that stop words can be used to attract, and then filter out, attention pollution. Our results show that rectified flow features significantly outperform previous works for zero-shot grounding without introducing additional fine-tuning or components.

The Challenge: While diffusion models have revolutionized image generation, extracting meaningful semantic features from these models remains challenging. Existing methods typically rely on older diffusion architectures like Stable Diffusion 2.1, require extensive fine-tuning, or need to combine multiple large pre-trained models to achieve good performance.

The Opportunity: State-of-the-art image generators are shifting from diffusion models to rectified flow models, which offer more efficient and direct sampling through deterministic integration. However, no prior work has successfully extracted and analyzed semantic features from these newer architectures.
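For readers new to rectified flows, the following NumPy sketch illustrates the straight-line forward path and deterministic Euler sampling. It assumes the interpolation convention x_t = (1 - t) x_0 + t ε common in SD3/FLUX-style models (t = 0 is data, t = 1 is noise); the function names, the step count, and the oracle velocity in the usage check are illustrative and are not part of RIFF.

```python
import numpy as np


def interpolate(x0, eps, t):
    """Forward path: a straight line from data (t = 0) to Gaussian noise (t = 1)."""
    return (1.0 - t) * x0 + t * eps


def target_velocity(x0, eps):
    """Regression target for the velocity network; constant along each straight path."""
    return eps - x0


def euler_sample(velocity_fn, x1, num_steps=28):
    """Deterministic sampling: integrate dx/dt = v(x, t) from t = 1 (noise) to t = 0 (data)."""
    ts = np.linspace(1.0, 0.0, num_steps + 1)
    x = x1
    for t_cur, t_next in zip(ts[:-1], ts[1:]):
        x = x + (t_next - t_cur) * velocity_fn(x, t_cur)  # step size is negative here
    return x


# Usage: with the exact velocity of a single (data, noise) pair, even a few Euler
# steps land back on the data, because the path is a straight line.
rng = np.random.default_rng(0)
x0 = rng.standard_normal((4, 8))
eps = rng.standard_normal((4, 8))
oracle = lambda x, t: target_velocity(x0, eps)
print(np.allclose(euler_sample(oracle, eps, num_steps=4), x0))  # True
```

A trained model only approximates this velocity, so real samplers use more steps; the straightness of the path is what makes few-step deterministic integration feasible.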

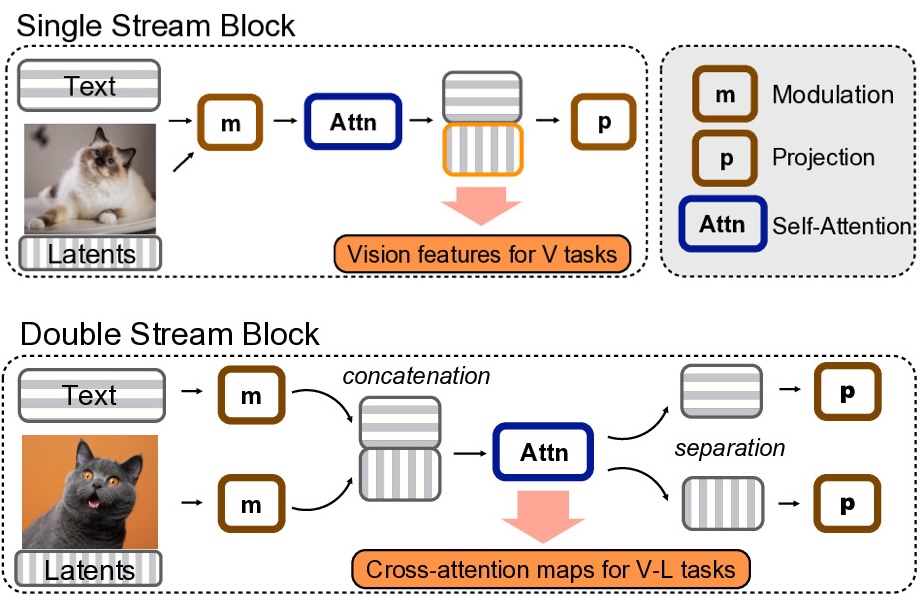

Our Solution: We introduce the first methods to extract high-quality semantic features from rectified flow models, specifically targeting DiT (Diffusion Transformer) architectures used in modern generators like FLUX and Mochi. Our approach is embarrassingly simple yet highly effective, achieving significant improvements across multiple benchmarks without requiring fine-tuning or model combinations.

We are the first to successfully extract semantic features from rectified flow models, unlocking the potential of modern generative architectures for computer vision tasks.

Our iRIFF variant uses flow inversion to obtain structured latents that align with the data distribution, significantly improving feature quality and robustness.
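The difference between the two variants can be sketched as follows, under our own assumptions: a RIFF-style input noises the clean latent with freshly sampled Gaussian noise, while an iRIFF-style input is obtained by integrating the velocity ODE forward from the clean latent (t = 0) to the feature-extraction timestep with plain Euler steps. `toy_velocity` is a hypothetical stand-in for the DiT, and the timestep, step count, and solver are illustrative choices, not the settings used by iRIFF.

```python
import numpy as np


def integrate(velocity_fn, x, t_from, t_to, num_steps=20):
    """Euler integration of dx/dt = velocity_fn(x, t) from t_from to t_to."""
    ts = np.linspace(t_from, t_to, num_steps + 1)
    for t_cur, t_next in zip(ts[:-1], ts[1:]):
        x = x + (t_next - t_cur) * velocity_fn(x, t_cur)
    return x


def riff_input(x0, t, rng):
    """RIFF-style input (assumed): mix the clean latent with fresh random noise."""
    eps = rng.standard_normal(x0.shape)
    return (1.0 - t) * x0 + t * eps


def iriff_input(velocity_fn, x0, t, num_steps=20):
    """iRIFF-style input (assumed): invert the flow from data (t = 0) to t, so the
    noisy latent is a deterministic function of x0 instead of a random draw."""
    return integrate(velocity_fn, x0, 0.0, t, num_steps)


# Hypothetical stand-in for the DiT velocity prediction.
toy_velocity = lambda x, t: -0.5 * x

rng = np.random.default_rng(0)
x0 = rng.standard_normal((4, 8))
a, b = riff_input(x0, 0.5, rng), riff_input(x0, 0.5, rng)
print(np.allclose(a, b))  # False: each call draws new noise
c, d = iriff_input(toy_velocity, x0, 0.5), iriff_input(toy_velocity, x0, 0.5)
print(np.allclose(c, d))  # True: inversion is deterministic
# Integrating back from t = 0.5 recovers x0 up to Euler discretization error.
print(np.allclose(integrate(toy_velocity, c, 0.5, 0.0), x0, rtol=1e-2))  # True
```

The design point the sketch illustrates is determinism: the inverted latent depends only on the input and the model, which is one way to read "aligning the input noise with the data".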

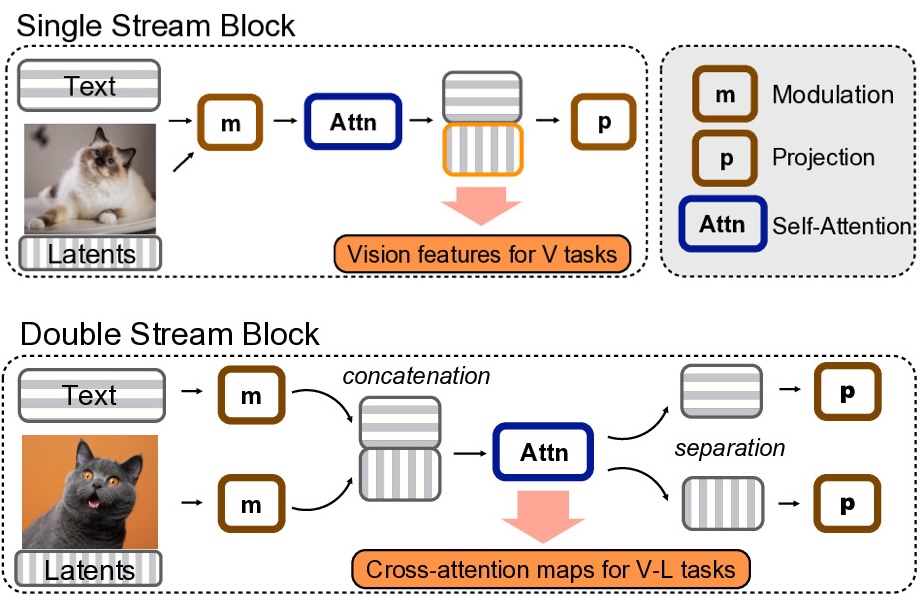

We discover and exploit how stop words "pollute" cross-attention maps, using them as attention magnets to improve vision-language grounding.

We extend semantic feature evaluation to both images and videos, achieving state-of-the-art results in zero-shot settings across multiple benchmarks.

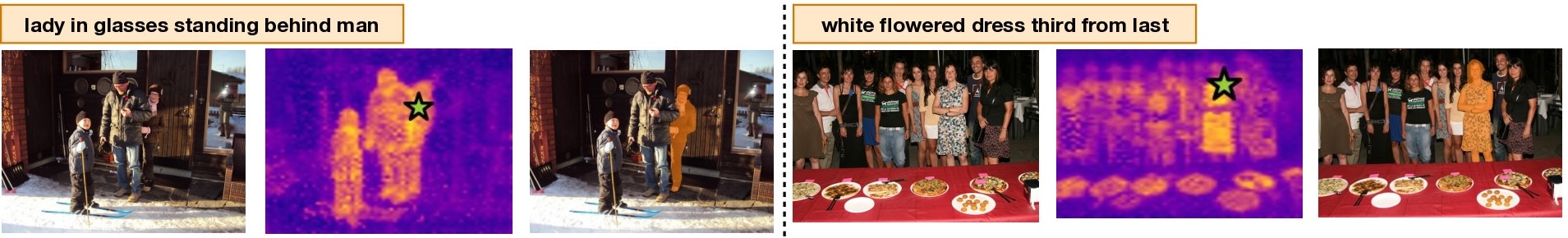

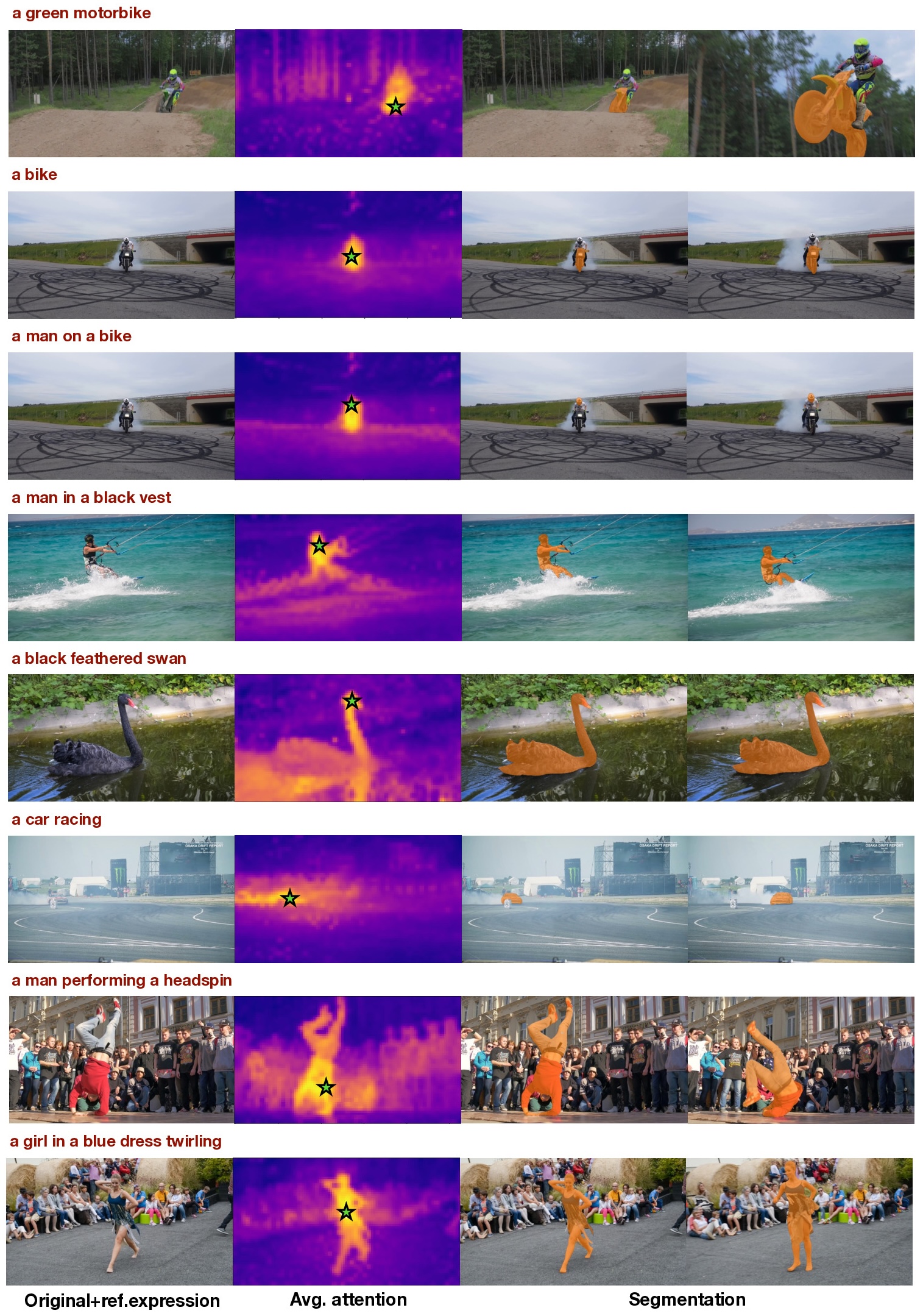

A key discovery in our work is that stop words (e.g., "the", "a", "of") act as attention magnets in cross-attention maps, absorbing significant attention scores and creating noisy backgrounds that hurt segmentation quality. We exploit this phenomenon by strategically adding extra stop words to referral expressions, which further concentrate the attention pollution, and then filtering out all stop words to obtain cleaner attention maps. This simple yet effective technique dramatically improves the quality of attention-based segmentation across both image and video domains, leading to more precise object localization.
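A minimal NumPy sketch of the filtering step, assuming word-level tokens and a cross-attention matrix from image queries (rows) to text tokens (columns). The stop-word list, the position and number of the extra stop words, and mean aggregation followed by min-max normalization are hypothetical choices for illustration; the actual text encoder uses subword tokens, and the exact list and aggregation are not specified here.

```python
import numpy as np

# Hypothetical stop-word list for illustration only.
STOP_WORDS = {"the", "a", "an", "of", "on", "in", "to", "and", "with", "is"}


def add_extra_stop_words(expression, extra=("the", "a", "of"), repeat=2):
    """Append extra stop words that act as attention magnets (hypothetical placement)."""
    return expression + " " + " ".join(list(extra) * repeat)


def grounding_map(attn, tokens, stop_words=STOP_WORDS):
    """attn: (num_image_tokens, num_text_tokens), one column per text token.
    tokens: the word behind each column. Drops every stop-word column, averages the
    remaining content-word columns, and min-max normalizes the result to [0, 1]."""
    keep = [i for i, tok in enumerate(tokens) if tok.lower() not in stop_words]
    if not keep:
        raise ValueError("expression contains only stop words")
    heat = attn[:, keep].mean(axis=1)
    heat = heat - heat.min()
    peak = heat.max()
    return heat / peak if peak > 0 else heat


prompt = add_extra_stop_words("the red cup")
print(prompt)  # the red cup the a of the a of
tokens = prompt.split()

# Toy attention over 4 image tokens: every stop word is drawn to the background
# token 3, while "red" and "cup" peak on the object token 2.
attn = np.tile(np.array([[0.1], [0.1], [0.1], [0.3]]), (1, len(tokens)))
content = np.array([0.01, 0.01, 0.05, 0.01])
for i, tok in enumerate(tokens):
    if tok not in STOP_WORDS:
        attn[:, i] = content

print(int(np.argmax(attn.mean(axis=1))))             # 3: polluted by stop words
print(int(np.argmax(grounding_map(attn, tokens))))   # 2: the referred object
```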

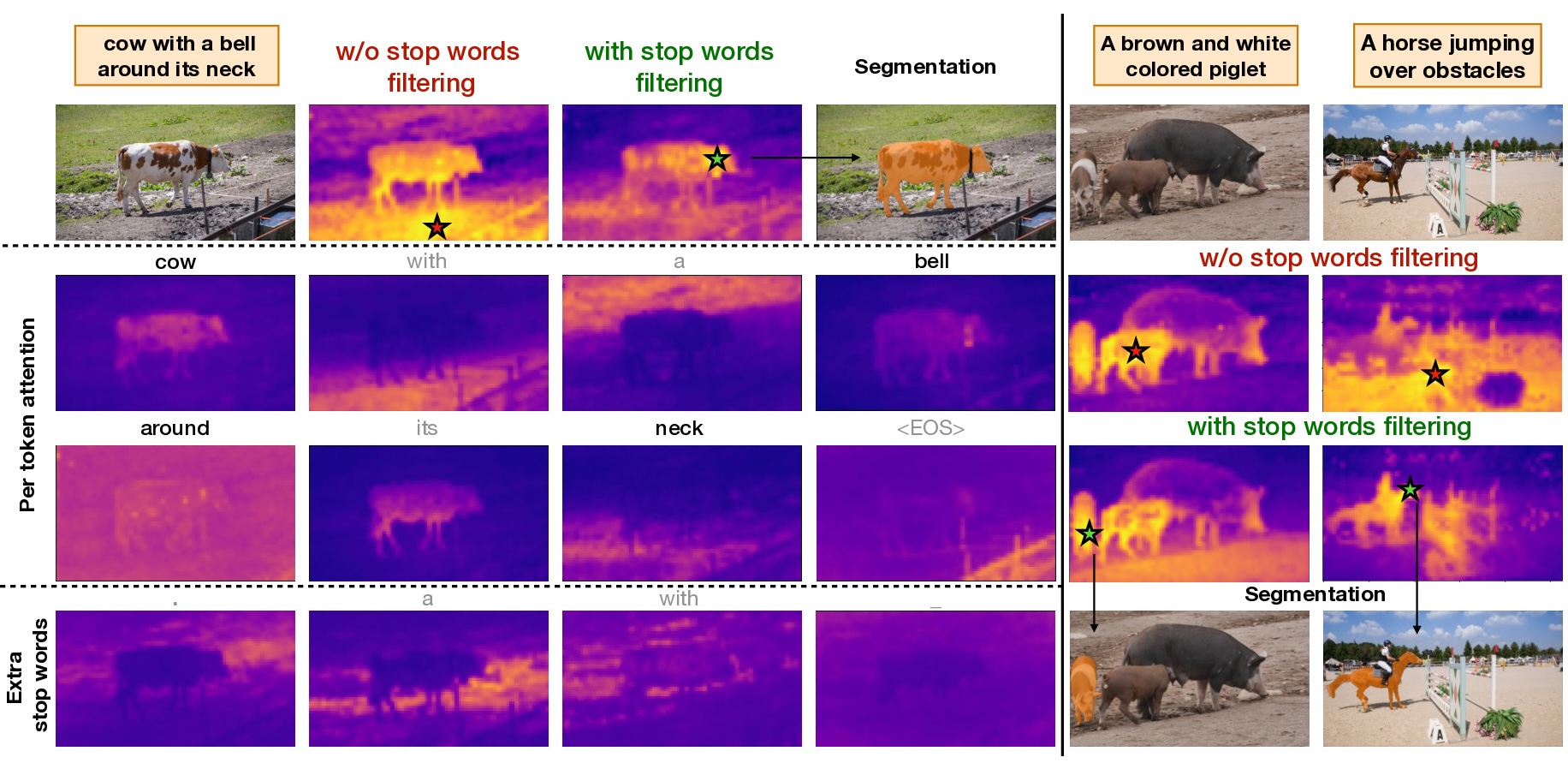

On semantic correspondence benchmarks (SPair-71k, PF-Pascal), our methods establish new state-of-the-art results. iRIFF improves over RIFF on average, demonstrating the importance of proper latent alignment through flow inversion. Compared to previous single-model approaches such as DIFT, we achieve substantial improvements (65.1 vs. 57.7 in the Average column of the per-category table below) while using a much simpler pipeline: no fine-tuning, no model combinations, just better base models and smarter feature extraction.

| Method | Plane | Bicycle | Bird | Boat | Bottle | Bus | Car | Cat | Chair | Cow | Dog | Horse | Motorbike | Person | Plant | Sheep | Train | TV | Average |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| DINOv2 | 53.5 | 54.0 | 60.2 | 35.5 | 44.4 | 36.3 | 31.7 | 61.3 | 37.4 | 54.7 | 52.5 | 51.5 | 48.8 | 48.2 | 37.8 | 44.1 | 47.4 | 38.2 | 46.5 |
| DIFT | 63.5 | 54.5 | 80.8 | 34.5 | 46.2 | 52.7 | 48.3 | 77.7 | 39.0 | 76.0 | 54.9 | 61.3 | 53.3 | 46.0 | 57.8 | 57.1 | 71.1 | 63.4 | 57.7 |
| SD + DINOv2 | 73.0 | 64.1 | 86.4 | 40.7 | 52.9 | 55.0 | 53.8 | 78.6 | 45.5 | 77.3 | 64.7 | 69.7 | 63.3 | 69.2 | 58.4 | 67.6 | 66.2 | 53.5 | 64.0 |
| RIFF (ours) | 72.6 | 62.8 | 80.1 | 44.7 | 50.0 | 64.8 | 56.1 | 82.8 | 45.7 | 79.6 | 65.6 | 67.2 | 65.9 | 64.0 | 57.0 | 58.0 | 70.5 | 61.6 | 63.9 |
| iRIFF (ours) | 73.8 | 63.5 | 84.2 | 45.0 | 53.2 | 66.2 | 55.8 | 83.6 | 46.7 | 81.0 | 64.1 | 70.7 | 69.2 | 69.0 | 55.5 | 61.0 | 68.1 | 60.7 | 65.1 |

iRIFF, a single model, outperforms the SD + DINOv2 combination on average (65.1 vs. 64.0).
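To make the zero-shot correspondence protocol concrete, the sketch below assumes DIFT-style nearest-neighbour matching: DiT patch tokens are reshaped into a feature grid (row-major token order is an assumption), and each source keypoint is matched to the target cell with the highest cosine similarity. The table above does not name its metric; the PCK function, with a threshold of alpha times the larger bounding-box side, is shown as a common convention for SPair-71k and is an assumption here, as are all function names.

```python
import numpy as np


def tokens_to_grid(tokens, h, w):
    """Reshape (h * w, C) patch tokens, assumed row-major, into an (h, w, C) feature map."""
    return tokens.reshape(h, w, -1)


def match_keypoint(src_feat, tgt_feat, y, x):
    """Return the (row, col) of the target cell whose feature has the highest cosine
    similarity to the source feature at (y, x)."""
    q = src_feat[y, x] / (np.linalg.norm(src_feat[y, x]) + 1e-8)
    t = tgt_feat / (np.linalg.norm(tgt_feat, axis=-1, keepdims=True) + 1e-8)
    sim = t @ q  # (h, w) cosine similarities
    row, col = np.unravel_index(np.argmax(sim), sim.shape)
    return int(row), int(col)


def pck(pred, gt, bbox_hw, alpha=0.1):
    """Fraction of predicted keypoints within alpha * max(bbox height, width) of the ground truth."""
    pred, gt = np.asarray(pred, dtype=float), np.asarray(gt, dtype=float)
    dist = np.linalg.norm(pred - gt, axis=1)
    return float(np.mean(dist <= alpha * max(bbox_hw)))


# Usage with random toy features: plant a scaled copy of one source feature in the target.
rng = np.random.default_rng(0)
src = tokens_to_grid(rng.standard_normal((16, 8)), 4, 4)
tgt = tokens_to_grid(rng.standard_normal((16, 8)), 4, 4)
tgt[3, 0] = 5.0 * src[1, 2]  # same direction, so cosine similarity is ~1
print(match_keypoint(src, tgt, 1, 2))  # (3, 0)
print(pck([[0, 0], [10, 10]], [[1, 0], [0, 0]], bbox_hw=(20, 50)))  # 0.5
```

Cosine similarity ignores feature magnitude, which is why the scaled copy is still the best match; in practice feature maps are usually upsampled before matching, which this sketch omits.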

We extend semantic feature evaluation beyond pure vision tasks to vision-language grounding, testing on the RefCOCO, RefCOCO+, and RefCOCOg datasets. Our stop-word filtering technique proves crucial: without it, attention maps are dominated by background noise. With filtering, we achieve remarkable zero-shot performance that rivals specialized grounding models while placing much simpler requirements on the architecture. Our approach achieves a 16.4% performance gain over previous training-free methods.

| Method | Vision Backbone | RefCOCO val (oIoU) | RefCOCO testA (oIoU) | RefCOCO testB (oIoU) | RefCOCO+ val (oIoU) | RefCOCO+ testA (oIoU) | RefCOCO+ testB (oIoU) | RefCOCOg val (oIoU) | RefCOCOg test (oIoU) |
|---|---|---|---|---|---|---|---|---|---|
| *Zero-shot methods w/o additional training* | | | | | | | | | |
| Grad-CAM | R50 | 23.44 | 23.91 | 21.60 | 26.67 | 27.20 | 24.84 | 23.00 | 23.91 |
| Global-Local | R50 | 24.55 | 26.00 | 21.03 | 26.62 | 29.99 | 22.23 | 28.92 | 30.48 |
| Global-Local | ViT-B | 21.71 | 24.48 | 20.51 | 23.70 | 28.12 | 21.86 | 26.57 | 28.21 |
| Ref-Diff | ViT-B | 35.16 | 37.44 | 34.50 | 35.56 | 38.66 | 31.40 | 38.62 | 37.50 |
| TAS | ViT-B | 29.53 | 30.26 | 28.24 | 33.21 | 38.77 | 28.01 | 35.84 | 36.16 |
| RIFF (ours) | DiT | 38.29 | 43.07 | 34.01 | 39.58 | 44.78 | 35.01 | 39.45 | 39.53 |
| iRIFF (ours) | DiT | 39.23 | 44.05 | 35.34 | 41.71 | 45.24 | 35.95 | 40.25 | 40.38 |

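Among these training-free methods, iRIFF achieves the best oIoU on every split, and RIFF is second-best on every split except RefCOCO testB, where Ref-Diff scores higher.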
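The table reports oIoU (overall IoU), which accumulates intersections and unions over an entire split before dividing, so large objects weigh more than in per-sample mean IoU. The sketch below contrasts the two under that standard reading; the masks are toy data and the function names are our own.

```python
import numpy as np


def oiou(preds, gts):
    """Overall IoU: total intersection divided by total union over the whole split."""
    inter = sum(int(np.logical_and(p, g).sum()) for p, g in zip(preds, gts))
    union = sum(int(np.logical_or(p, g).sum()) for p, g in zip(preds, gts))
    return inter / union if union else 0.0


def miou(preds, gts):
    """Mean IoU: average of per-sample IoUs (an empty-vs-empty pair counts as 1)."""
    ious = []
    for p, g in zip(preds, gts):
        union = int(np.logical_or(p, g).sum())
        ious.append(int(np.logical_and(p, g).sum()) / union if union else 1.0)
    return float(np.mean(ious))


# Toy split: one large object segmented perfectly, one small object missed entirely.
big = np.ones((10, 10), dtype=bool)
small_gt = np.zeros((10, 10), dtype=bool)
small_gt[0:2, 0:2] = True
small_pred = np.zeros((10, 10), dtype=bool)
small_pred[5:7, 5:7] = True
preds, gts = [big, small_pred], [big, small_gt]
print(round(oiou(preds, gts), 4))  # 0.9259 (100 / 108)
print(miou(preds, gts))            # 0.5
```

The gap between the two numbers on the same predictions is why oIoU scores should only be compared with other oIoU scores.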

We demonstrate that our rectified flow features extend effectively to video understanding tasks. Using Mochi (a video rectified flow model), we extract features from the first frame and rely on SAM2's temporal propagation for consistent video segmentation. Our stop-word filtering technique is even more crucial in video, where temporal consistency amplifies attention noise. Among training-free methods that do not build on Grounded-SAM, iRIFF + SAM2 reaches 54.6 J&F, compared with 46.9 for the strongest baseline, G-L (SAM) + SAM2, setting a new state of the art for zero-shot video referral segmentation in this setting; methods built on Grounded-SAM, such as AL-Ref-SAM2 (74.2 J&F), remain stronger.

| Method | J&F | J | F |
|---|---|---|---|
| *Training-Free with Grounded-SAM* | | | |
| Grounded-SAM | 65.2 | 62.3 | 68.0 |
| Grounded-SAM2 | 66.2 | 62.6 | 69.7 |
| AL-Ref-SAM2 | 74.2 | 70.4 | 78.0 |
| *Training-Free* | | | |
| G-L + SAM2 | 40.6 | 37.6 | 43.6 |
| G-L (SAM) + SAM2 | 46.9 | 44.0 | 49.7 |
| RIFF + SAM2 (ours) | 53.7 | 51.1 | 56.3 |
| iRIFF + SAM2 (ours) | 54.6 | 50.9 | 58.2 |
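One plausible reading of the first-frame prompting step is sketched below: take the peak of the filtered first-frame attention map, convert it to pixel coordinates, and check whether it falls inside the ground-truth mask, which is our assumed definition of the PA (point accuracy) column in the ablation. In the public SAM2 repository, such a point could then be passed as a positive click to the video predictor (`add_new_points_or_box`) and propagated with `propagate_in_video`; that step is omitted because it needs the model weights. The function names and the cell-centre mapping are illustrative assumptions.

```python
import numpy as np


def peak_point(heatmap, image_hw):
    """Return the (x, y) pixel at the centre of the arg-max cell of an (h, w) heatmap,
    for an image of size image_hw = (H, W)."""
    h, w = heatmap.shape
    row, col = np.unravel_index(np.argmax(heatmap), heatmap.shape)
    H, W = image_hw
    return float((col + 0.5) * W / w), float((row + 0.5) * H / h)


def point_accuracy(points, gt_masks):
    """Fraction of (x, y) points that land inside their ground-truth mask (assumed PA)."""
    hits = [bool(mask[int(y), int(x)]) for (x, y), mask in zip(points, gt_masks)]
    return float(np.mean(hits))


# Toy first-frame heatmap on a 4x4 token grid, peaking at row 1, column 2.
heat = np.zeros((4, 4))
heat[1, 2] = 1.0
pt = peak_point(heat, (64, 64))
print(pt)  # (40.0, 24.0)

mask = np.zeros((64, 64), dtype=bool)
mask[16:32, 32:48] = True            # object covering the peak cell
empty = np.zeros((64, 64), dtype=bool)
print(point_accuracy([pt, pt], [mask, empty]))  # 0.5
```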

| Inv. | E-SW | SW | SAM2 | J&F | J | F | PA |
|---|---|---|---|---|---|---|---|
| ✓ | ✓ | ✓ | H | 54.6 | 50.9 | 58.2 | 60.2 |
| - | ✓ | ✓ | H | 53.7 | 51.1 | 56.3 | 57.3 |
| - | - | ✓ | H | 50.7 | 47.4 | 53.9 | 48.4 |
| - | - | - | H | 48.0 | 45.1 | 50.8 | 47.6 |
| ✓ | ✓ | ✓ | S | 50.1 | 46.7 | 53.5 | 60.2 |

Inv. = inversion, E-SW = extra stop words, SW = stop word filtering, SAM2 H/S = huge/small model, PA = point accuracy
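The J, F, and J&F columns follow the DAVIS-style convention in which J is region similarity (mask IoU), F is contour accuracy, and J&F is their mean; the reported rows are consistent with that, e.g. (50.9 + 58.2) / 2 ≈ 54.6. The sketch below is a simplified NumPy version that uses 4-neighbour boundaries and a fixed square pixel tolerance instead of the official benchmark's tolerance and boundary matching, so it is an approximation for illustration, not the evaluation code behind these numbers.

```python
import numpy as np


def jaccard(pred, gt):
    """Region similarity J: IoU of two boolean masks (1.0 if both are empty)."""
    union = np.logical_or(pred, gt).sum()
    return 1.0 if union == 0 else np.logical_and(pred, gt).sum() / union


def _boundary(mask):
    """Mask pixels that have at least one 4-neighbour outside the mask."""
    m = np.pad(mask, 1, constant_values=False)
    interior = m[1:-1, 1:-1] & m[:-2, 1:-1] & m[2:, 1:-1] & m[1:-1, :-2] & m[1:-1, 2:]
    return mask & ~interior


def _dilate(mask, r):
    """Binary dilation with a (2r+1) x (2r+1) square, built from shifted copies."""
    h, w = mask.shape
    m = np.pad(mask, r, constant_values=False)
    out = np.zeros_like(mask)
    for dy in range(2 * r + 1):
        for dx in range(2 * r + 1):
            out |= m[dy:dy + h, dx:dx + w]
    return out


def boundary_f(pred, gt, tol=1):
    """Simplified contour accuracy F: boundary precision/recall within `tol` pixels."""
    bp, bg = _boundary(pred), _boundary(gt)
    if bp.sum() == 0 and bg.sum() == 0:
        return 1.0
    if bp.sum() == 0 or bg.sum() == 0:
        return 0.0
    precision = (bp & _dilate(bg, tol)).sum() / bp.sum()
    recall = (bg & _dilate(bp, tol)).sum() / bg.sum()
    return 0.0 if precision + recall == 0 else 2 * precision * recall / (precision + recall)


def j_and_f(preds, gts, tol=1):
    """Average J and F over frames; J&F is the mean of the two."""
    j = float(np.mean([jaccard(p, g) for p, g in zip(preds, gts)]))
    f = float(np.mean([boundary_f(p, g, tol) for p, g in zip(preds, gts)]))
    return j, f, (j + f) / 2


# Two-frame toy clip: frame 0 is perfect, frame 1 is shifted down by one pixel.
gt = np.zeros((16, 16), dtype=bool)
gt[4:12, 4:12] = True
pred = np.roll(gt, 1, axis=0)
j, f, jf = j_and_f([gt, pred], [gt, gt])
print(round(j, 4), f, round(jf, 4))  # 0.8889 1.0 0.9444
```

With a one-pixel tolerance the shifted contour still counts as correct while the region overlap drops, which illustrates why J and F can move differently across the ablation rows.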

Our RIFF and iRIFF methods provide powerful tools for extracting semantic features from rectified flow models, enabling advances in computer vision tasks such as semantic correspondence and referral segmentation. These capabilities have the potential to significantly enhance various applications including medical image analysis, robotics, autonomous systems, and assistive technologies for people with visual impairments.

By providing training-free, zero-shot methods that work across different domains (images and videos), our approach democratizes access to state-of-the-art semantic understanding capabilities. This is particularly valuable for researchers and practitioners who may not have access to large computational resources or extensive labeled datasets typically required for fine-tuning specialized models.

However, as with any advancement in computer vision and AI, there are potential ethical considerations. Improved semantic understanding capabilities could be misused for surveillance or privacy violation purposes. We emphasize the importance of deploying these technologies responsibly, with appropriate safeguards and consideration for privacy rights. We encourage the research community to continue developing ethical guidelines for the deployment of semantic feature extraction technologies and to consider the broader societal implications of these advancements.